When Teaching Becomes Play

From Distill to Hamming

Chris Olah was sitting across from me in Google Brain’s San Francisco office explaining Distill. The idea was that scientific communication could come alive through interactive interfaces.

Distill wasn’t meant to be another publication venue. It was an argument that the medium itself mattered. Instead of static equations and frozen figures, readers could touch the concepts. JavaScript was the bridge between mathematical abstraction and human intuition.

Of course, there was a caveat: this kind of interaction required knowledge of JavaScript.

Fast-forward: LLMs as game designers and teaching collaborators

When large language models arrived, so did the ability to generate interactive content by describing it in words rather than coding it line by line.

Today, if you want to make a small game that illustrates, say, Bayes’ rule, you can simply describe it to an LLM. You just say:

“Create a React app that can be viewed on a mobile browser to visualize how prior and likelihood combine to form a posterior, with sliders for both.”

…and a few seconds later, you have a working frontend.
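The math behind such an app is small. As a minimal sketch, assuming a single yes/no hypothesis and two likelihood sliders (the function and parameter names here are hypothetical, not what any particular LLM would generate), the update the sliders would drive looks like this:

```javascript
// Posterior probability of a hypothesis H after observing evidence E.
// Assumed inputs (all illustrative names), each a probability in [0, 1]:
//   prior             = P(H)
//   likelihoodIfTrue  = P(E | H)
//   likelihoodIfFalse = P(E | not H)
function posterior(prior, likelihoodIfTrue, likelihoodIfFalse) {
  // Total probability of seeing the evidence at all.
  const evidence =
    likelihoodIfTrue * prior + likelihoodIfFalse * (1 - prior);
  // If E is impossible under both cases, the posterior is undefined.
  if (evidence === 0) return NaN;
  // Bayes' rule: P(H | E) = P(E | H) P(H) / P(E).
  return (likelihoodIfTrue * prior) / evidence;
}
```

For instance, `posterior(0.01, 0.9, 0.05)` comes out to roughly 0.154: even strong evidence leaves a rare hypothesis unlikely, which is exactly the kind of thing a slider makes easy to feel.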

Pair that with a global CDN (e.g. Vercel or Cloudflare), and you’ve got instant worldwide distribution of a concept in pure JavaScript. No backend, database, or DevOps. The web becomes a lightweight teaching platform.

This changes the economics of pedagogy.

Where Distill once asked: “What if explanation could be interactive?”,

we can now ask: “What if interaction itself could be authored conversationally?”

Why games are a powerful medium for learning

Games are the most natural teaching technology we’ve ever invented.

They reward exploration, expose feedback loops, and invite persistence.

When structured around an idea like a theorem, a model, or a process, they become living diagrams.

A well-designed educational game does what a textbook cannot: it builds intuition through experience. The player doesn’t memorize the rule: they feel it.

For abstract fields like machine learning or information theory, that kinesthetic dimension can make the difference between rote knowledge and deep understanding.

Building Hamming: a Wordle for coding theory

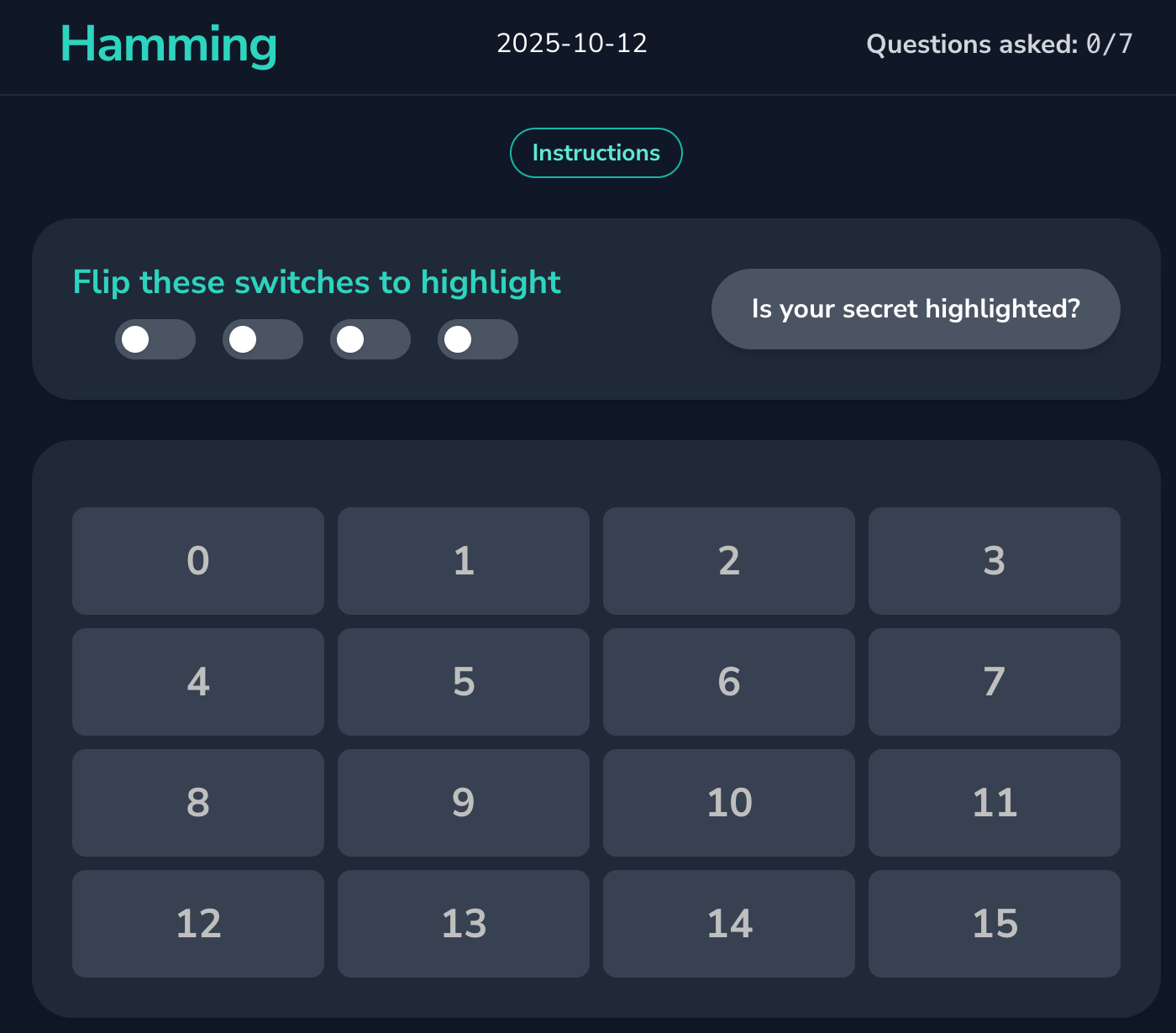

I’ve experimented with this idea myself. The result is Hamming, a browser-based game inspired by Wordle but grounded in information theory and error-correcting codes.

Each game of Hamming is a puzzle in which you get 7 rounds of questions to find a mystery number, but one of the answers will be a lie, i.e., the opposite of the truthful answer to the question.

It’s playful, but it teaches a genuine concept: how redundancy allows communication to survive noise. It also lets you play with the structure of a Hamming(7,4) code.
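To make that structure concrete, here is an illustrative sketch of textbook Hamming(7,4) encoding and single-error correction. It is not the game's source code: it assumes the mystery number is in the range 0 to 15 and that the seven answers correspond to the seven bits of a standard codeword, and the names `encode`, `decode`, and `liePosition` are made up for this example.

```javascript
// Encode a number 0..15 as a 7-bit Hamming(7,4) codeword.
// Codeword positions 1..7 live at array indices 0..6;
// parity bits sit at positions 1, 2 and 4 (the powers of two).
function encode(n) {
  const d1 = (n >> 3) & 1;
  const d2 = (n >> 2) & 1;
  const d3 = (n >> 1) & 1;
  const d4 = n & 1;
  const p1 = d1 ^ d2 ^ d4; // covers positions 1, 3, 5, 7
  const p2 = d1 ^ d3 ^ d4; // covers positions 2, 3, 6, 7
  const p4 = d2 ^ d3 ^ d4; // covers positions 4, 5, 6, 7
  return [p1, p2, d1, p4, d2, d3, d4];
}

// Recover the number from seven answers, at most one of which was flipped.
function decode(answers) {
  const c = answers.slice(); // do not mutate the caller's array
  // Each syndrome bit re-checks one parity group.
  const s1 = c[0] ^ c[2] ^ c[4] ^ c[6];
  const s2 = c[1] ^ c[2] ^ c[5] ^ c[6];
  const s4 = c[3] ^ c[4] ^ c[5] ^ c[6];
  // Read as a binary number, the syndrome is the 1-based position
  // of the flipped answer, or 0 if every answer was truthful.
  const liePosition = s1 + 2 * s2 + 4 * s4;
  if (liePosition !== 0) c[liePosition - 1] ^= 1;
  const value = (c[2] << 3) | (c[4] << 2) | (c[5] << 1) | c[6];
  return { value, liePosition };
}
```

As a usage example, `encode(11)` gives `[0, 1, 1, 0, 0, 1, 1]`. Flip the fifth answer to get `[0, 1, 1, 0, 1, 1, 1]`, and `decode` still returns `value` 11 with `liePosition` 5. Three extra bits of redundancy are enough both to find the lie and to undo it.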

I wrote the first version of Hamming by describing the rules to an LLM in natural language, iterating on edge cases, and letting the model scaffold the JavaScript. Then I deployed it as a static site on Cloudflare’s CDN. From prompt to playable game, the whole process took less than an afternoon.

You can try it yourself at hamming.krisheswaran.com.

Reflection

Nearly a decade ago, in that corner of Google Brain, Chris was showing me how JavaScript could animate understanding. Now, we can do the same thing, but with the machine writing much of the code for us.

What hasn’t changed is the goal: to make abstract ideas tangible.

Play with it: hamming.krisheswaran.com